Increasing rate of experimentation

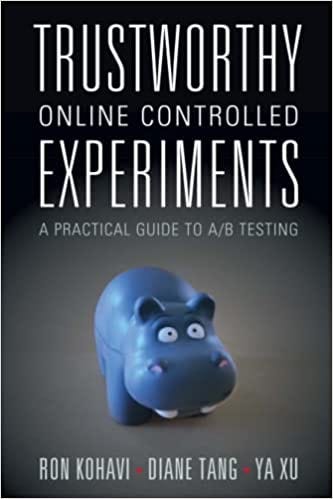

I am a big fan of Ronny Kohavi. His book “Trustworthy Online Controlled Experiments: A Practical Guide to A/B Testing” should literally be considered a bible for any startup trying to scale up on their experimentation. In this post, I don’t want to give a summary of the book in any way. I am, however, going to give some complimentary points which might help teams which are planning to scale up on experimentation.

There are many kinds of backend/frontend/ML algorithm driven experiments are conducted by companies. My concentration in the post will be majorly on the ML algorithm/Decision sciences/Product heuristic based experiments. Here are some of my most important takeaways in the process of increasing experiments 10X:-

Mindset of experimentation - There needs to be acceptance across the board that we don’t know enough about the consumer, and hence anything(which is not outrageous) can work. You can, at times, find product managers and even data team members making sweeping statements about the consumer. While, smart experimentation, which rules out experiments which create extremely bad consumer experience is good, but the habitual push of not trying out things which are 50:50 is extremely bad. The mindset change might need a push from the top leadership.

Basic tools for setting up experiments - Providing basic tooling around the set up of experimentation is extremely important in giving the experimentation rate a push. For example, Creating tooling which determines things such as - what sample size is needed for a 80% power and 99% significance level for a particular kind of experiment. This is simple and yet a big win. Understanding how these numbers work is a massive win but not easy to push across teams.

Standard set of metrics is extremely important -

Photo by Austin Distel on Unsplash I would not delve too much into this one but an understanding of what metric to measure (Overall Evaluation Criterion), what are the guardrail metrics, and what are possible pitfalls such as Sample Ratio Mismatch is extremely important. Understanding statistical significance and practical significance is hygiene. For the details of all these concepts, please read the book. At an overall level, metrics are important and the metrics must be agreed upon between the ML team, analytics engineering team, the product team, and whoever else is involved in the decision making process. A clear definition and the understanding of the current baselines of those metrics is much desirable.

Standardized dashboards ,standardized analysis, and standard reporting structure is the key - One of the problems across multiple startups is the adhoc analysis of experiments. Not enough startups are ready to invest this one time effort. It does pay off. The problem with doing adhoc analysis is the inertia caused by it amongst each person that is doing the experiment. There is human time getting consumed for repeatable analysis. Assuming, you have a team of ML Scientists doing end to end work, this is indeed super expensive time. Additionally, building such standardized dashboards and analysis can show you some of the glaring problems in your instrumentation. As time goes on, keep adding additional things to you dashboard but a baseline dashboard which measure important metrics is extremely important as you are ramping up on experimentation. For the junior members of the team, it is also a great way to push metric ownership rather than model ownership. Get everyone to speak up about metrics of their experiments up front in the daily standup calls.

Track offline, online metrics, service health metrics at the same place - This might seem a like a simple obvious thing but in reality a very few teams do it. Once you start tracking all the offline and online metrics at the same place, it becomes easier for the conductor of experiment to keep a constant look at this one dashboard. Also, the tracking offline and online metrics can be useful when you are trying to correlate your offline metrics with the online metrics. I can assure you that finding a decent correlation between offline and online metrics is extremely difficult. Decision makers love such correlation (An established causation can be even better) as it (almost) guarantees business rewards. Service heath metrics should ideally be tracked on the same dashboard. Service health metrics can be things such uptime, latency, NULL values in your response(in case NULL’s are not expected), input data metrics such as inputs coming as NULL etc. Putting everything at the same place makes the experiment conductor feel complete ownership of the experiment.

Source - https://towardsdatascience.com/track-and-organize-your-ml-projects-e44e6c7c3f9d

Simplification of contracts can lead to democratization of experimentation - A simple contract of how data exchange will happen between the underlying experiment system and the backend can help multiple teams carry out experiments. Creating a complicated contract can push the load of experimentation on a single team which can adhere to a real time inferencing contract. A bonus is - Creating a simple offline evaluation script which even a semi-tech employee can run to evaluate her/his hypothesis. This can be similar to the Kaggle leaderboards for the solution being evaluated. This can help a team filter out extremely sub-standard solutions before they hit production.

User bucketing -

Source of image - https://towardsdatascience.com/the-essential-guide-to-sample-ratio-mismatch-for-your-a-b-tests-96a4db81d7a4

This seems like an obvious thing to have but having talked to multiple data leaders in the industry, this seems to be a problem. Having equal users buckets which have similar baseline performances is not done. A clean control group of users doesn’t exist. This, to my mind, is hygiene. This, and rehashing users every once in a while so that any kind of residual effect can be ruled(almost) out. Running an AA experiment is even better and highly recommended. For many companies, there can be network effects and creating equal user buckets through cluster sampling is required - That is an advanced topic which I would cover in another post.

Track the rate of experimentation and set goals around it -

You can’t improve what you can’t measure. So, in order to improve velocity of experimentation, it is highly recommended to track the rate of experimentation. For this, one must define what constitutes an experiment and then track the same. Measures can be as simple as number of experiments per person per team or something more complicated. Once you start tracking the rates, it is not a bad idea to set goals of making it 2X every quarter or whatever feels suitable. There can also be a trajectory of having slower experimentation in newer business areas which have signed up for experimentation recently and faster experimentation rate benchmarks for the mature business areas. For ML experiment tracking Neptune.ai, Weights & Biases are great tools.

Knowledge sessions on experimentation - Training sessions around experimentation and pushing a lot of literature around it on you slack/teams channels are great ways to further push for experimentation. Knowledge sessions are great places to bring in your senior leadership and ask them to push experimentation as the livelihood of the company.

I hope you find my newsletters useful. Please subscribe to my newsletters to keep me motivated about writing.

Hey Arkid, your posts are a hidden treasure for anyone who’s starting out in Rec /ad systems and want to make a career out of it. Please do keep writing if you can! :)

Superb Read!! Very informative yet simple to understand!! Thank you for this brilliantly articulated newsletter!! Will look forward to more such newsletters in the near future.