On Machine Learning teams

ML team productivity, hiring, skillsets, motivation, etc.

Talking with a lot of technology startups, I keep coming across the same recurring questions about the productivity of ML teams. These questions are common enough that it is a good time for me to try to answer them in one newsletter. I will definitely end up missing a few, so comments are more than welcome. Here is what I would like to cover:

When should we hire Machine Learning Scientists?

How many ML scientists do we need?

What should be the skillset of an ML scientist?

What should be the interview process for ML scientists?

How much time should be spent on ML research by the team?

What is ML tech debt? What is the importance of ML tech debt?

What is the iterative process that ML teams need to get into?

Do Sprints make sense for ML teams?

Should the MLOps team be separate and what should be their role?

How should we measure the productivity of the ML teams?

How should we keep the ML teams motivated during the various phases of the company?

I am sure many of you have faced very similar questions, so I will try to give an informed opinion on each of them.

When should we hire Machine Learning Scientists?

You should not, until there is a real need. If Machine Learning is your primary product, you might want to hire ML scientists up front. Otherwise, wait. In an ML-first company, I would guess that the founders are already doing some amount of ML work themselves, so hiring decisions should be fairly sorted. However, if you are a typical B2C or B2B company, there is no reason for you to hire an ML scientist early. This closely mirrors the first rule of Machine Learning, which is to do no Machine Learning. In short, don't hire an ML scientist until you have a real use case that can't be solved without ML. A basic but crude rule of thumb is to ask: "Are moving averages not good enough?" If your Analytics Engineering or Data Engineering team can solve the problem reasonably well, you don't need an ML scientist. If you hire one at this stage without a good problem to solve, you will waste a lot of money, and the person will also leave your company soon. Once you have a problem where basic descriptive rules don't work, you might have a use case for your first couple of ML hires. Some use cases where you might hit this point are generative models, decisions under uncertainty, recommendation systems, fraud detection systems, and problems that combine forecasting and control. My educated guess is that this usually happens somewhere around Series B/C.

How many ML scientists do we need?

This depends on the kind of company you are. If you are a very ML-heavy company, the answer is obvious. For a typical (B2C/B2B) company, I don't think it should be more than 2-3 ML scientists per use case. This is a basic rule of thumb, and the number can be higher or lower depending on how complicated your use cases are. In some ways, a 1:2:4 ratio between ML scientists, Data Engineers, and Analytics Engineers is a better rule of thumb. The number also depends on the kind of ML scientists you hire, which I cover in the next question.
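As a rough illustration, both rules of thumb above reduce to simple arithmetic. The Python sketch below is my own, and the function names are hypothetical. It treats 2-3 ML scientists per use case as a ceiling and scales the rest of the data team from the 1:2:4 ratio.

```python
def ml_scientist_ceiling(num_use_cases: int) -> tuple[int, int]:
    """Range for the maximum number of ML scientists under the 2-3 per use case rule of thumb."""
    return 2 * num_use_cases, 3 * num_use_cases


def data_team_from_ratio(ml_scientists: int) -> dict[str, int]:
    """Team sizes implied by a 1:2:4 ratio of ML scientists : Data Engineers : Analytics Engineers."""
    return {
        "ml_scientists": ml_scientists,
        "data_engineers": 2 * ml_scientists,
        "analytics_engineers": 4 * ml_scientists,
    }


# Hypothetical example: a company with two ML use cases.
low, high = ml_scientist_ceiling(2)
print(f"ML scientists: at most {low}-{high}")
print(data_team_from_ratio(low))
```

For two use cases, this gives a ceiling of 4-6 ML scientists. The ratio then implies twice as many Data Engineers and four times as many Analytics Engineers. Treat both numbers as sanity checks rather than hiring targets.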

What should be the skillset of an ML scientist and how do I make an informed choice on their salaries?

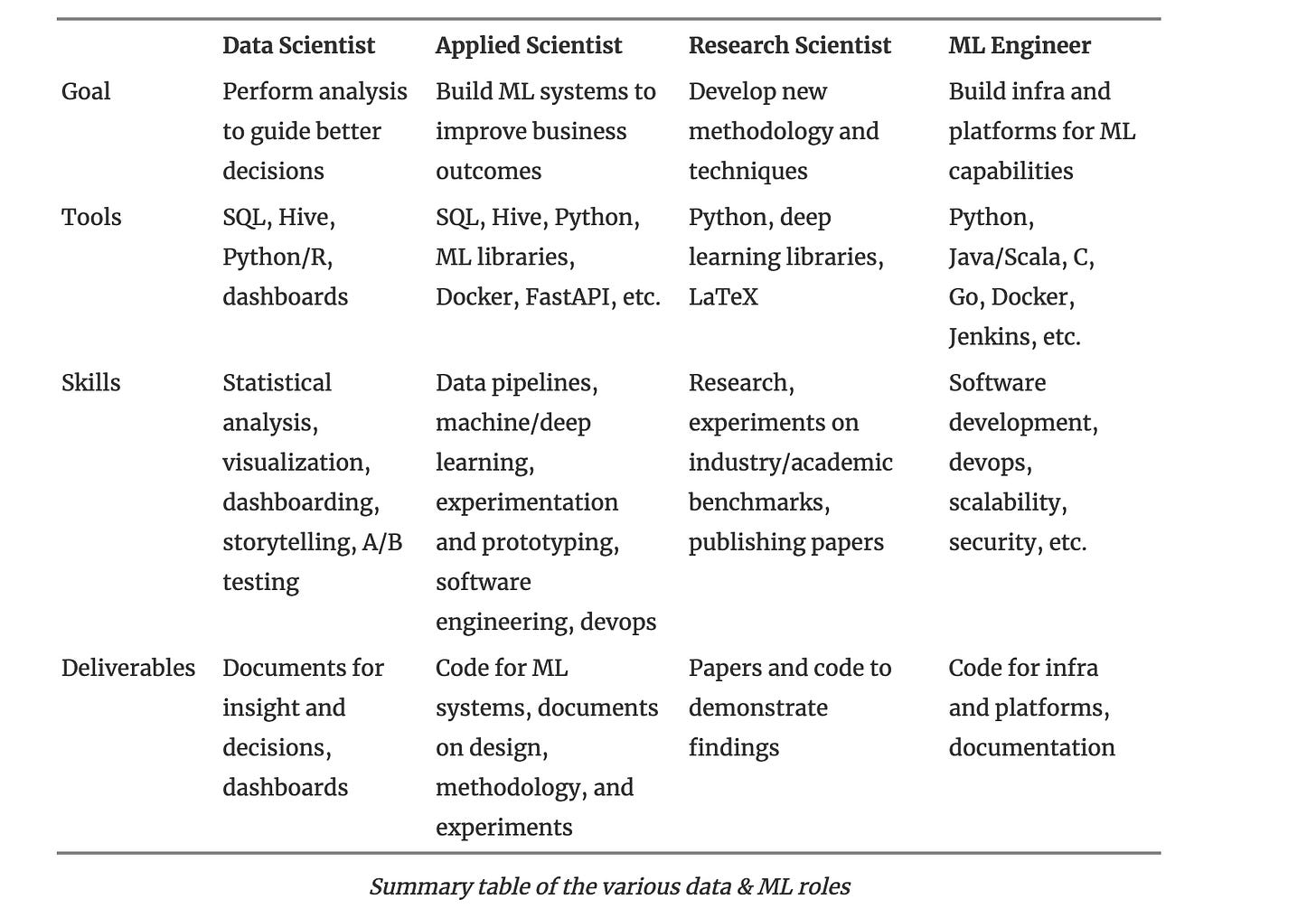

This is the difficult one, and again it depends on how complicated your use case is. I would highly recommend researching the various ML roles in the industry. If you are not a very research-heavy company, my choice would be the end-to-end kind, which the linked article describes as Applied Scientists. This assumes you have a decent enough problem statement; if you don't, go back to the first question. I am a massive believer in the end-to-end nature of the ML scientist job, because it can lead to massive productivity gains.

Source - https://eugeneyan.com/writing/data-science-roles/

ML Engineers are the same as Software Engineers (ML). Their job is to build the infrastructure that ML scientists need to function. I would draw a very thin line between ML Engineers and MLOps, and I might not want a separate team for each. MLOps teams do some POCs on the ML Platform built by the ML Engineers or SWE (ML)s, so a single end-to-end MLE/MLOps team is more desirable.

Applied Scientists (or what you might call full-stack ML scientists) are usually more expensive in the market than MLEs or SWE (ML)s, who have the same pay scales as SWEs. The data scientists mentioned in this table are the same as analytics engineers or data analysts (at scale). So, make an informed choice about whom you want on the team based on the stage of the company. At Series B, I would build a data team of Applied Scientists, Data Engineers, and Analytics Engineers (or Analysts). At a later stage, as your ML models start to make an impact and your scale grows fast, you might want to start hiring MLOps engineers/MLEs. Hiring research scientists depends on company strategy. It should most likely happen after Series D/E, or even pre/post IPO, depending on how research-heavy your product is.

What should be the interview process for ML scientists?

Chip Huyen has an extremely extensive guide on ML hiring. However, assuming you are hiring ML scientists (Applied Scientists), my process would be:

An overview round with a senior ML or data leader to understand the person's aspirations and whether the person is a brilliant jerk. Don't hire brilliant jerks. It doesn't work for ML teams, and it doesn't work for anyone. This is also the primary place where you should be able to convince the ML scientist about the kind of work you have.

A coding round, which can include coding some basic ML algorithms from scratch and writing quality code (depending on the level you are hiring for).

I am not a massive believer in LeetCode for ML scientists, because it is far more important for an ML person to understand the internals of ML algorithms than to know how to invert a binary tree.

A breadth round, where the person is asked questions on the basics of ML algorithms. This round should also cover the basics of statistics, experiment design, etc.

A depth round, where you discuss either a paper the person has published or a use case she/he has solved. This round is very important for gauging whether you are force-fitting someone into an end-to-end ML scientist role.

An optional ML systems round for the more experienced hires.

If you have any leadership rounds, you can hold them here. For junior levels, the first round should already be enough to gauge the person.

A big red flag when hiring Engineering Managers for an ML team is an EM who is not at least as good as your ML Scientist 1 or ML Scientist 2. A product-generalist kind of EM can demotivate your ML team and cause attrition. The data scientist/data analyst role described in the previous question fills the product-generalist/analyst role much better.

How much time should be spent on ML research by the team?

My short answer is: as much as you can without hurting the goals of the ML product you are building. That said, doing very little research until the Series B/C stage is not a big problem. Still, some research helps build a brand around your ML team, so trying to publish at decent conferences (or even on ML blogs) might help you hire. These publications also tend to give the team a boost and help retain them. In the initial stages, a 20:80 ratio between long-term research work and getting stuff done is a decent rule, and most ML people will be more than okay with it. As the company matures, the time spent on research can increase. If your problem statement is inherently something that hasn't been solved before, the share of research might go higher.

What is ML tech debt? What is the importance of ML tech debt?

Here comes the elephant in the room, and the one idea that is not well understood in most ML teams. Medium to large ML teams carry an immense amount of tech debt without realizing it. Tech debt comes in many forms:

- cluttered pipelines with shamelessly bad coding practices
- no common libraries that multiple projects/teams can use
- no common dashboards/monitoring
- badly structured repositories
- at a later stage, no MLOps practices at all

It is not a bad idea to take out a couple of sprints to pay down the debt that exists in the system. This will make the ML team much more productive in later sprints. This tech debt period should also be a time for ML teams to strengthen their processes. Some amount of ML roadmapping during this period is a good idea too.

What is the iterative process that ML teams need to get into?

The idea of iterative development in ML teams is as fundamental as agile is in software development. A lot of young ML scientists enter the industry dreamy-eyed, thinking that everything they learned in college or courses will work right away. A few failures and a lack of mentorship can create chaos in the mind of an ML scientist. However, a few failures plus someone who can guide the team toward an iterative process of ML development can take the team a long way.

The iterative process starts with a baseline. This can be based on heuristics, descriptive analytics, or a moving-average/KNN kind of model, and it should show some upside (or even downside) compared to the control group. Once such a baseline is in place, the ask is for the ML team to build models that beat it. The ask should also be to choose a family of models on which multiple improvements are possible. For example, one path for the next iteration is to analyze model performance to find feature transformations or new features (the most fundamental way). Other paths include using class weights differently or trying a different cost function.

One important indicator that you have reached such an iterative stage is this: by the time you deploy the current version of the model, you already know what you want to try in the next iteration. If you are jumping model families every 2 weeks, you are most probably doing it wrong. Once you hit the iterative stage, teams tend to get very far, very fast. Parallel paths exploring other model families (or even feature families) will also start springing up very soon. A mid-sized (6-10 person) ML team can handle multiple paths and multiple problem statements.
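The "beat the baseline" step can be sketched in Python. This sketch assumes a univariate time series, a moving-average baseline, and mean absolute error as the offline metric. These are illustrative choices, not something the text prescribes, and the function names are hypothetical.

```python
from statistics import mean


def moving_average_forecast(series: list[float], window: int = 3) -> list[float]:
    """One-step-ahead baseline: predict each point as the mean of the previous `window` points."""
    return [mean(series[i - window:i]) for i in range(window, len(series))]


def mean_absolute_error(actual: list[float], predicted: list[float]) -> float:
    return mean(abs(a - p) for a, p in zip(actual, predicted))


def beats_baseline(series: list[float], candidate_preds: list[float],
                   window: int = 3, min_gain: float = 0.0) -> tuple[bool, float, float]:
    """Check whether a candidate model's one-step forecasts beat the moving-average baseline.

    `candidate_preds` must cover the same points the baseline predicts, i.e. series[window:].
    Returns (beats, baseline_mae, candidate_mae).
    """
    if len(series) <= window:
        raise ValueError("series is too short for the chosen window")
    actual = series[window:]
    if len(candidate_preds) != len(actual):
        raise ValueError("candidate must predict the same points as the baseline")
    baseline_mae = mean_absolute_error(actual, moving_average_forecast(series, window))
    candidate_mae = mean_absolute_error(actual, candidate_preds)
    return candidate_mae < baseline_mae - min_gain, baseline_mae, candidate_mae


# Hypothetical weekly demand with a steady upward trend.
demand = [10, 12, 14, 16, 18, 20]
# Hypothetical candidate model: extrapolate the last observed change.
candidate = [demand[i - 1] + (demand[i - 1] - demand[i - 2]) for i in range(3, len(demand))]
print(beats_baseline(demand, candidate))
```

This offline check is only a gate before rollout. The comparison the text cares about is online, against the control group. The `min_gain` parameter is one way to require a meaningful improvement instead of a lucky tie-breaker.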

A word of caution here: when pushed too hard, the iterative mindset can cause ML teams (especially less experienced ones) to stop thinking creatively. New ideas might stop coming, and people can work one-dimensionally on iterations of the same path. One possible approach is to keep 20% of the team working on new possible paths at all times. This 20% needs to be rotated so that everyone gets a chance to work on non-linear ideas for solving problems. If these non-linear ideas beat the current best models, or even come close to a top model, they should become part of the iterations.
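The text does not prescribe a rotation mechanism. One simple option, assumed here, is a round-robin schedule that moves through the roster sprint by sprint, so everyone gets an exploration slot before anyone gets a second one. The function `exploration_rotation` is hypothetical.

```python
import math


def exploration_rotation(team: list[str], sprints: int, percent: int = 20) -> list[list[str]]:
    """Round-robin schedule: each sprint, about `percent`% of the team works on new paths."""
    if not team:
        return []
    # Round up so that even a small team has at least one person exploring.
    group_size = max(1, math.ceil(len(team) * percent / 100))
    schedule = []
    start = 0
    for _ in range(sprints):
        # Take the next `group_size` people, wrapping around the roster.
        schedule.append([team[(start + k) % len(team)] for k in range(group_size)])
        start = (start + group_size) % len(team)
    return schedule


# Hypothetical 10-person team: 2 explorers per sprint, everyone rotated in over 5 sprints.
team = [f"scientist_{i}" for i in range(10)]
for sprint, group in enumerate(exploration_rotation(team, sprints=5), start=1):
    print(sprint, group)
```

Rounding up favors exploration on small teams. For example, a 6-person team gets 2 explorers (about 33%) rather than 1. Teams that want a strict ceiling could round down instead.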

Do Sprints make sense for ML teams?

Absolutely. If your ML team is not working in sprints, you can expect very average output. ML teams can drift very far away from the problem statement at hand.

The length of a sprint, however, can be anywhere between 2 and 4 weeks, depending on the stage of the ML team. Time-boxed sprint plans support the iterative process of ML development, discussed in the previous question, much better. Without such boxing of what to deliver in the current sprint, ML teams are prone to wandering around the ML wonderland, waiting for the one big thing to work.

The definition of done in a sprint for ML teams, however, has to be more nuanced. A guaranteed metric movement is very hard to commit to as a definition of done, so a definition built around experiment rollouts works better.

I also like the idea of internally publishing the team's work at the end of every sprint. The first version along an iterative path can take more than a sprint, because getting a first version out takes some time. However, I don't see any reason why it should take more than 2 sprints.

Should the MLOps team be separate and what should be their role? - Call it the ML Platform team

The role of an MLOps platform and an MLOps team becomes more prominent once 1 or 2 of your Machine Learning APIs start to scale and the end-to-end ML scientists can't handle them alone. Otherwise, the ML scientists might end up spending all their time on complicated MLOps setups.

To my mind, the MLOps team can be combined with the MLE team, which is supposed to build and support the ML platform needed for heavy ML workloads. This team should also be able to make small tweaks to existing ML models and be very good at building resilient ML pipelines. Building a good ML platform that increases ML developer productivity is really hard and is worth its weight in gold.

In a way, the job of the MLOps team (I would rather call it the ML Platform team) is to:

Build, maintain, and support the ML Platform. This is much harder than it sounds. I don't see ML orchestration becoming easy any time soon. There will be a continuous need to develop complicated components that provide abstractions for data-intensive applications.

Incorporate software engineering workflows into the ML team's workflows and support the ML scientists in adopting them.

Take some existing models (adding some more complexity if possible) and demonstrate how such a model can work at 10X the current scale with the expected latencies.

Move the ML APIs into a truly CI/CD/CM (continuous monitoring) environment.

How should we measure the productivity of the ML teams?

The rate of experimentation is a good measure of an ML team's productivity. However, what counts as an experiment should be clearly documented so that logging experiments is unambiguous. There have also been concerns about teams gaming rate-of-experimentation measures. Even so, I would encourage an experiment log that consists of the following fields:

Experiment ID

Timestamp of rollout

Experiment Name

Name of Base Experiment (In case, it is a part of some iteration)

Rollout/Rollback (whether it was rolled out or rolled back)

Offline Metrics

Online Performance

Comments about the experiment (if written properly, these can be made searchable)
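A minimal sketch of such a log in Python follows, assuming an in-memory store. The field names map one-to-one to the list above, and the class names and helper methods are hypothetical. A real team would more likely back this with a shared table or an experiment-tracking tool. The `count_between` method gives the raw rate of experimentation. The `search` method makes the comments searchable, and `lineage` follows the base-experiment links of an iterative path.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Experiment:
    """One row of the experiment log; fields mirror the list above."""
    experiment_id: str
    rollout_time: datetime
    name: str
    base_experiment: Optional[str]  # name of the base experiment, if this is an iteration
    rolled_out: bool                # True = rolled out, False = rolled back
    offline_metrics: dict[str, float] = field(default_factory=dict)
    online_performance: dict[str, float] = field(default_factory=dict)
    comments: str = ""


class ExperimentLog:
    """Minimal in-memory experiment log (hypothetical helper, not a real library)."""

    def __init__(self) -> None:
        self.entries: list[Experiment] = []

    def add(self, experiment: Experiment) -> None:
        self.entries.append(experiment)

    def search(self, term: str) -> list[Experiment]:
        """Case-insensitive substring search over experiment names and comments."""
        term = term.lower()
        return [e for e in self.entries
                if term in e.name.lower() or term in e.comments.lower()]

    def count_between(self, start: datetime, end: datetime) -> int:
        """Number of experiments rolled out in [start, end): the raw rate of experimentation."""
        return sum(start <= e.rollout_time < end for e in self.entries)

    def lineage(self, name: str) -> list[str]:
        """Follow base_experiment links back to the root of an iterative path."""
        by_name = {e.name: e for e in self.entries}
        chain: list[str] = []
        current = by_name.get(name)
        # Stop at the root, or if a malformed log contains a cycle.
        while current is not None and current.name not in chain:
            chain.append(current.name)
            current = by_name.get(current.base_experiment) if current.base_experiment else None
        return chain


# Hypothetical usage: a baseline and one iteration on top of it.
log = ExperimentLog()
t0 = datetime(2024, 1, 1)
log.add(Experiment("EXP-1", t0, "ma-baseline", None, True, {"mae": 4.0}, {}, "Moving-average baseline"))
log.add(Experiment("EXP-2", t0 + timedelta(days=9), "gbm-v1", "ma-baseline", False,
                   {"mae": 3.1}, {}, "GBM with lag features; rolled back"))
print(log.count_between(t0, t0 + timedelta(days=14)), log.lineage("gbm-v1"))
```

Keeping the base experiment as an explicit field is what makes iterative paths visible. A burst of experiments with no lineage is a hint that the team may be jumping model families rather than iterating.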

How should we keep the ML teams motivated during the various phases of the company?

ML teams can often become demotivated for multiple reasons:

- a lack of challenging work (when you hired an ML team too early, without a real use case for them)
- a lack of enough work (when the ML team is too large for the number of use cases)
- senior leadership that doesn't have enough respect for data
- senior ML team members becoming too hung up on MLOps alone
- incompetent Engineering Managers

Some of my pointers for keeping ML scientists motivated are:

Make them love metrics more than models

Create an environment where people learn from each other. Have paper reading sessions, blog sharing channels, discussions around ML, etc.

Create end-to-end teams instead of separate teams for pipelining (optimization of pipelines), deployment, etc.

Bring statistical rigor to the analysis of models. Fresh ML scientists can sometimes be quite unfamiliar with statistics. Bringing in rigor not only helps the analysis but also makes ML scientists feel challenged.

Learning budgets are important. Encouraging ML scientists to read books and take courses is a great way to keep them motivated.

Partnerships with academia and publishing some work go a long way toward keeping the team motivated.

I hope you find my newsletters useful. Please subscribe to my newsletters to keep me motivated about writing.